By Susannah Darling

NASA Headquarters

Imagine, if you will, that you are driving to your favorite restaurant. The traffic is bad, so you use your GPS to find the best route. To get your current location, your phone or GPS receiver listens for signals from satellites orbiting high above the Earth. Those signals give the GPS system the information it needs to determine where you are and the quickest way to get to your destination.

But sometimes, the signal gets interrupted, the GPS won’t load, or it points you in the wrong direction. Why does this happen?

Ryan McGranaghan, space scientist at ASTRA, LLC and NASA affiliate, tried to tackle this problem by figuring out when a GPS is right and when it’s likely to be wrong. To achieve this, McGranaghan turned to observations of past disturbances in GPS signals. He explored how machine learning could help figure out what made the signal go haywire in each case.

The main thing he was trying to predict was a phenomenon called ionospheric scintillation. When the electrically-charged part of our atmosphere, known as the ionosphere, becomes too disturbed, it garbles GPS signals that pass through it.

But predicting when a scintillation event is going to happen is no easy task. The atmosphere is a complicated, constantly changing mix of physics and chemistry, and we still can’t account for every factor that determines when a scintillation event will occur.

To guess the future, look to the past

To start, McGranaghan looked at past data, where we already knew the outcome, and tried to use his algorithm to “guess,” based on a huge number of input variables, whether a given event would cause GPS disruption or not. It’s a bit like solving math problems and then checking your answers at the back of the book.

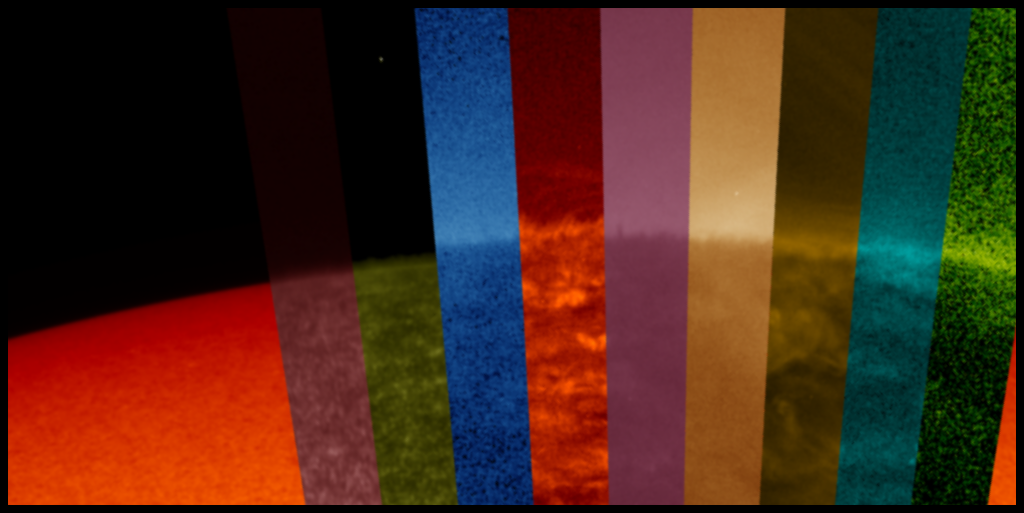

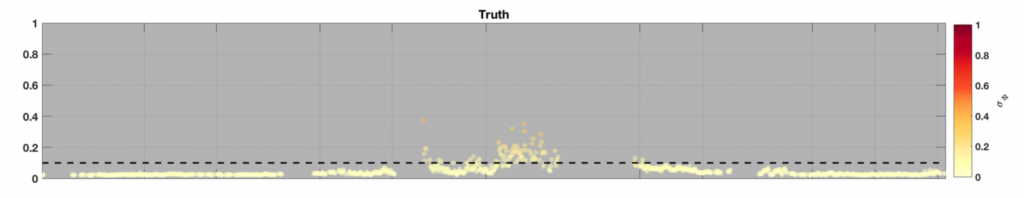

The graph below shows data on scintillation in the ionosphere. The vertical axis shows a calculation of how disturbed the ionosphere is over time, using data from multiple sources. The higher up on the axis, the more disturbed the ionosphere was at the time. (Click on the graph to see a larger version.)

The ionosphere is never perfectly undisturbed (the dots are always above zero), so scientists set a threshold, shown as the black dashed line on the graph, to mark the level at which communication begins to be disrupted. As you can see, towards the middle of the graph the disturbance rose past the threshold, enough to disrupt satellite signals.
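To make the threshold idea concrete, here is a minimal sketch in Python. The disturbance values and the threshold of 0.2 are made up for illustration; the article does not give the actual numbers or units.

```python
def label_events(disturbance_values, threshold):
    """Mark each measurement True if it lies above the dashed-line threshold."""
    return [value > threshold for value in disturbance_values]


# Hypothetical disturbance measurements over time (not real data).
measurements = [0.05, 0.12, 0.31, 0.08]
HYPOTHETICAL_THRESHOLD = 0.2  # assumed value, chosen only for this example

is_scintillation = label_events(measurements, HYPOTHETICAL_THRESHOLD)
print(is_scintillation)
```

Every dot gets a simple yes-or-no label: above the line or below it. Those labels are the "outcomes" a learning algorithm can later be checked against.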

That is where machine learning comes in. McGranaghan trained a support vector machine, or SVM, to try and guess the recipe for a scintillation event.

A support vector machine isn’t a real machine made of metal and gears. Rather, it’s an algorithm, a mathematical procedure used to separate complicated data into two groups. In this case, the support vector machine tried to guess, while looking only at the ingredients and not the outcome, which data points were “scintillation events” (dots that landed above the dashed line) and which were “non-scintillation events” (dots that landed below).

To do this, you have to first give the SVM some training data for it to practice on, where you show it both the ingredients and the outcomes. From this training data, it tries to “learn” (hence “machine learning”) which ingredients tend to produce which kind of outcomes, and then come up with a general rule.

Once plenty of training data has been fed into the algorithm and it has had time to practice, you give it new data. This time you show it just the ingredients, keeping the outcome hidden, and it tries to guess. Based on its experience with the training data, how well does it guess?
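The practice-then-guess routine can be sketched in a few dozen lines of Python. This is a simplified illustration, not McGranaghan's model: it uses a basic linear SVM trained by small corrective steps (subgradient descent on the hinge loss), and the "ingredients" are two made-up numbers per event, generated at random so that the two groups are easy to separate. All function names and settings here are assumptions for the example.

```python
import random


def make_events(n_per_class, seed):
    """Build synthetic (ingredients, outcome) pairs; outcome is +1 or -1."""
    rng = random.Random(seed)
    events = []
    for outcome, center in ((1, 2.0), (-1, -2.0)):
        for _ in range(n_per_class):
            ingredients = [rng.gauss(center, 0.5), rng.gauss(center, 0.5)]
            events.append((ingredients, outcome))
    rng.shuffle(events)
    return events


def score(weights, bias, ingredients):
    """Signed distance-like score: positive leans 'scintillation'."""
    return sum(w * x for w, x in zip(weights, ingredients)) + bias


def train_linear_svm(events, epochs=100, step=0.01, penalty=0.01, seed=0):
    """Learn a separating rule by nudging it whenever an example is too close."""
    rng = random.Random(seed)
    weights = [0.0] * len(events[0][0])
    bias = 0.0
    events = list(events)
    for _ in range(epochs):
        rng.shuffle(events)
        for ingredients, outcome in events:
            margin = outcome * score(weights, bias, ingredients)
            # Gentle shrinkage keeps the rule simple (L2 regularization).
            weights = [w * (1.0 - step * penalty) for w in weights]
            if margin < 1.0:
                # Example is misclassified or inside the margin: correct the rule.
                weights = [w + step * outcome * x
                           for w, x in zip(weights, ingredients)]
                bias += step * outcome
    return weights, bias


def predict(weights, bias, ingredients):
    """Guess +1 (scintillation) or -1 (no scintillation) from ingredients only."""
    return 1 if score(weights, bias, ingredients) >= 0 else -1


training = make_events(n_per_class=20, seed=1)   # ingredients and outcomes
new_data = make_events(n_per_class=10, seed=2)   # outcomes kept hidden below

weights, bias = train_linear_svm(training)
guesses = [predict(weights, bias, ingredients) for ingredients, _ in new_data]

# Only now do we peek at the hidden outcomes to grade the guesses.
correct = sum(g == outcome for g, (_, outcome) in zip(guesses, new_data))
accuracy = correct / len(new_data)
print(f"accuracy on unseen data: {accuracy:.2f}")
```

The important design choice is that `new_data` plays no part in training. The algorithm is graded only on events it has never seen, which is what makes the score a fair test of the rule it learned.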

Understanding the Results

In the case of ionospheric scintillation events, there are a few different kinds of guesses.

There are the two ways it can be right: guessing it was a scintillation event, and it really was — we’ll call that a hit — or guessing that it wasn’t a scintillation event, and it wasn’t — we’ll call that a correct rejection. In the graph below, these are color-coded as follows:

Correct responses

Hit – Green

Correct Rejection – Blue

There are two ways to be wrong as well: guessing that there wasn’t a scintillation event, and there was — a miss — and guessing that there was a scintillation event and there wasn’t — a false alarm.

Incorrect responses

Miss – Red

False Alarm – Yellow
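The four kinds of guesses map directly onto a small piece of code. The sketch below, with made-up guesses and outcomes, sorts each guess into one of the four categories and looks up its color; the function and variable names are illustrative only.

```python
from collections import Counter

# Color scheme from the lists above.
COLORS = {
    "hit": "green",
    "correct rejection": "blue",
    "miss": "red",
    "false alarm": "yellow",
}


def categorize(guessed_event, was_event):
    """Compare one guess with the true outcome and name the result."""
    if guessed_event and was_event:
        return "hit"
    if not guessed_event and not was_event:
        return "correct rejection"
    if was_event:
        return "miss"
    return "false alarm"


def tally(guesses, outcomes):
    """Count how many guesses fall into each of the four categories."""
    return Counter(categorize(g, o) for g, o in zip(guesses, outcomes))


# Hypothetical guesses and true outcomes (True means scintillation).
guesses = [True, True, False, False, True]
outcomes = [True, False, False, True, True]

counts = tally(guesses, outcomes)
for name, count in counts.items():
    print(f"{name} ({COLORS[name]}): {count}")
```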

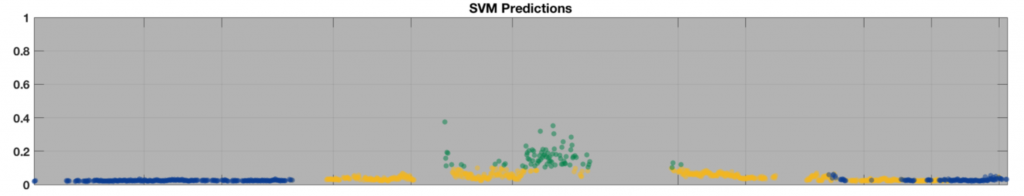

After feeding the data to the algorithm, the SVM made its guesses. We’ll now color-code the same data we saw above, but according to this new color scheme:

As you can see, it looks very similar to the previous graph, now in technicolor. Those colors are the result of the SVM identifying scintillation, and scientists marking how “correct” the SVM was.

The dark blue dots reveal where the SVM correctly identified that it was not a scintillation event. If the SVM had incorrectly identified that there was no scintillation event — a miss — the color would be red.

The green dots are cases where the SVM correctly identified that scintillation was happening. Notice that it correctly identified all the dots above the dashed line as scintillation events. But also notice the yellow dots. Those are data points the SVM incorrectly identified as scintillation; it was a little overzealous. These false alarms mean the SVM is predicting scintillation when it is not occurring, at least not to a degree that would interrupt signals.

The Future of Scintillation Predictions

This is just the beginning of a potentially powerful tool for predicting ionospheric scintillation. In the future, the SVM algorithm could be taught to be more careful about what it labels as scintillation; or, another machine learning algorithm could be applied to get more accurate results.
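One way an algorithm could be made "more careful" is to demand a higher confidence score before it calls something scintillation. The article does not say which approach would be used, so the sketch below is only an illustration of that one option, with made-up scores and outcomes.

```python
def count_errors(scores, outcomes, cutoff):
    """Return (false_alarms, misses) when scores at or above cutoff count as events."""
    false_alarms = sum(1 for s, o in zip(scores, outcomes) if s >= cutoff and not o)
    misses = sum(1 for s, o in zip(scores, outcomes) if s < cutoff and o)
    return false_alarms, misses


# Hypothetical confidence scores and true outcomes (True means scintillation).
scores = [-1.2, -0.3, 0.1, 0.4, 0.9, 1.5]
outcomes = [False, False, False, False, True, True]

for cutoff in (0.0, 0.5, 1.0):
    false_alarms, misses = count_errors(scores, outcomes, cutoff)
    print(f"cutoff {cutoff}: {false_alarms} false alarms, {misses} misses")
```

Raising the cutoff removes false alarms at first, but push it too far and real events start slipping through as misses. Finding the right balance is part of the tuning that remains to be done.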

Regardless, it would be up to the scientist reading the predictions to make the final decisions: both when the scintillation events could occur, and the best way to manage the loss of communication with the satellite.

Credit: NASA
