A SHOT IN THE DARK

Chasing the aurora from the world’s northernmost rocket range

Part II

At 3:45 am, the van rumbled over a snow-covered road away from the team’s dormitories. The launchpad was a ten-minute ride outside the town limits. Ny-Ålesund’s boundaries are marked with triangle-shaped signs, outlined in red, encasing the silhouette of a polar bear. “STOP!” they read, “Do not walk beyond this sign without your firearm.”

So far, residents had delivered nothing more than warning shots, but that summer’s 11 polar bear sightings kept them on their guard. Lately, the biggest nuisance had been a large male, nicknamed Whitey, who destroyed several of the vacation cabins used during warmer months. Half-joking “Wanted” posters hanging inside the mess hall show a picture of him, snout protruding through a cabin window. He is on the inside, looking out.

As the van approached the launchpad, tufts of snow skimmed across the ground like tiny clouds on a miniature landscape. The chassis hummed from the wind’s vibration — it was gusting hard today.

Today was the first of 15 opportunities to launch. Day 1 launches do happen occasionally. But for some missions, even two weeks won’t beget the combination of clear weather, pristine aurora, and no engineering issues. It’s not unheard of for entire teams to pack up and try again next year.

Across the snow, two yellow scaffolding towers aimed themselves skyward at a forty-five-degree angle. These were the launchers, and on the underside of each, encased in a Styrofoam shell, was a ready-to-launch rocket. Named by shortening their mission number, the nearest rocket was “39,” and behind it, “40.” Together, they comprised the VISIONS-2 mission.

These were sounding rockets — so-called for the nautical term “to sound,” meaning “to measure.” They vary in size, but can stand up to 65 feet tall and are usually just slim enough for a bear-hug. Sounding rockets fly anywhere from 30 to 800 miles high, carrying scientific instruments into space before falling back to Earth. The two on the launchpad carried 11 instruments between them. One rocket would spin through its flight, gathering data from all viewing angles, while the other would steady itself after launch for those experiments that required a stable view. They would launch two minutes apart along a southward trajectory, peaking around 300 miles high and landing some 15 minutes later in the Greenland Sea.

The rockets and their launchers are controlled from the blockhouse, a modest building 100 yards from the launchpad. Inside, a burly man with a bushy beard stood in the middle of a tiny room, surrounded by eight engineers. Glenn Maxfield, the launcher systems manager, was one of the leaders of the team. He spent much of his time outside, with the rocket. But right now, he and the rest of the team were staring at a temperature gauge. Something was wrong.

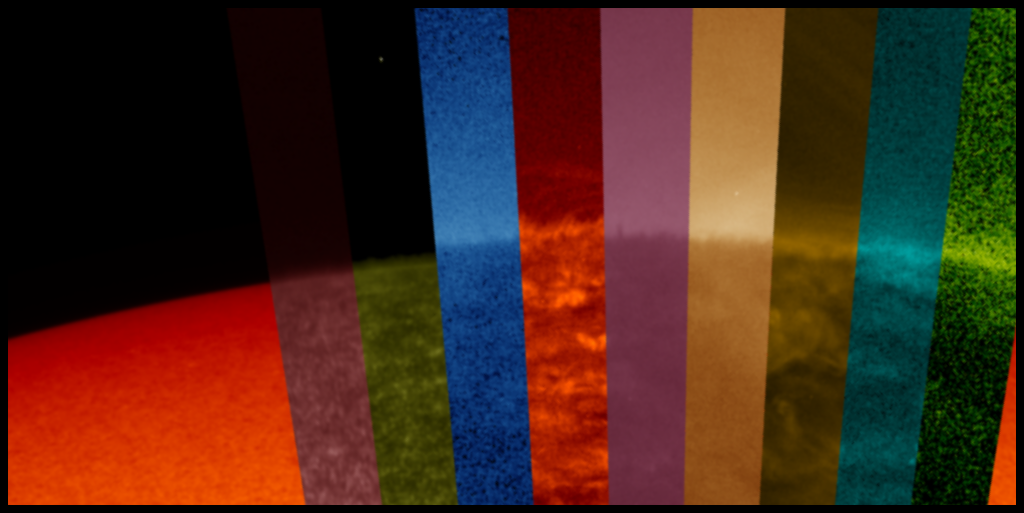

The temperature gauge monitored a special camera aboard one of the rockets: a charge-coupled device, or CCD. The CCD camera would capture imagery of the aurora as the rocket flew through it. But to work properly, it had to be cold, below -31 degrees Fahrenheit. Too warm and it would ruin their view of the aurora, producing “dark noise” that resembles an overexposed photograph.

To keep it cold the team was using a liquid nitrogen cooling system. But nitrogen was now pooling at a U-turn in the plumbing inside the rocket. If it wasn’t fixed, the instruments could cool so quickly they could fracture.

Maxfield was on the phone with Range Control, the team that coordinated launch operations. Range Control, noting increasing winds, wanted to lower the rockets from their ready-to-launch positions.

“Right now, I don’t know how much nitrogen is in there, and if we go down, there’s the potential that it runs into the instruments,” Maxfield said. But the solution was already in the works. Maxfield had opened a valve to allow excess nitrogen to evaporate out from the rocket; he could hear it hiss as it steamed away. Now, they just had to wait. He hung up the phone and headed back out to the rocket.

A few moments later Maxfield returned, looking satisfied. The hiss had stopped. The liquid was gone, and the CCD camera had reached the target temperature. “I think we’re good,” he said.

The success of a sounding rocket mission depends on fixing just these kinds of problems as they arise. But it depends just as much on the weather, which is much harder to control. Ground winds could endanger a rocket still on the launchpad, but winds higher up were no less threatening. For all their complicated mechanics, sounding rockets have no rudder, no real-time ability to steer once they’re in flight. Sounding rockets “go where you point them,” Rowland, the mission leader, said. “Unless it’s windy. Then they go somewhere else.”

So the team doesn’t take chances: The launch systems could accommodate winds up to 20 miles per hour, but no more. Gusty conditions could send the team home for the day. A prolonged storm could squander their entire two-week window.

Monitoring those winds was the job of Anders Moen and Tommy Jensen, both employees of the Andøya Space Center, the Norwegian agency responsible for operating the range. Inside the blockhouse, they were tracking a weather balloon. Their screen displayed a simplified map of Svalbard, with Ny-Ålesund at the center. A thin line squiggled across Ny-Ålesund to a point somewhere over the Arctic Ocean, marking the balloon’s current location. It was almost out of range — about time to launch another.

Moen and Jensen got up and walked into the neighboring open hangar. Next to its rolling door stood a collection of giant metal gas tanks. Jensen reached for one and turned the dial, and a hollow hissing sound began. He held a white balloon in his fist; it hung at first like an empty bag, then righted itself, filling rapidly until it reached a 5-foot diameter. Moen tied it to a GPS device, a small white box about the size of a paperback novel. Jensen pushed a button on the wall and the large rolling door opened.

Outside the hangar, the wind was loud, and snow rolled in front of them like tumbleweed. Jensen raised his arm, waiting for a signal on his walkie-talkie, as Moen carried the white box. A moment’s pause, a walkie-talkie confirmation, and Jensen let the balloon go. With a loud tearing sound, it took off like a dragster, jerking the white box from Moen’s hand; the box whipped frantically after it. Shooting off at a diagonal, the balloon quickly disappeared into the darkness.

During launch attempts, Moen and Jensen carried out this ritual several times a day. After release, the GPS device would send real-time data on the balloon’s altitude, speed, and direction, allowing them to monitor high-altitude winds. In a few moments, a new line would trace across Moen and Jensen’s monitor. They turned and walked back into the hangar as the rolling door closed behind them.

Soon after, the signal from the newly launched GPS balloon was coming in, and the news wasn’t good. It was showing gusts at 37 miles per hour, well above their cutoff. Range Control radioed in and recommended “scrubbing,” or ending the launch attempt for the day. Shortly afterward, Rowland made it official.

Day 1 was over, rockets still on the ground.

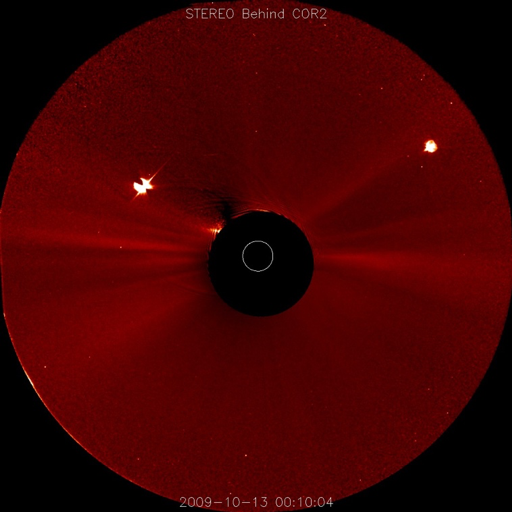

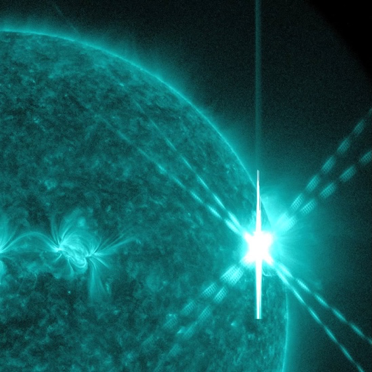

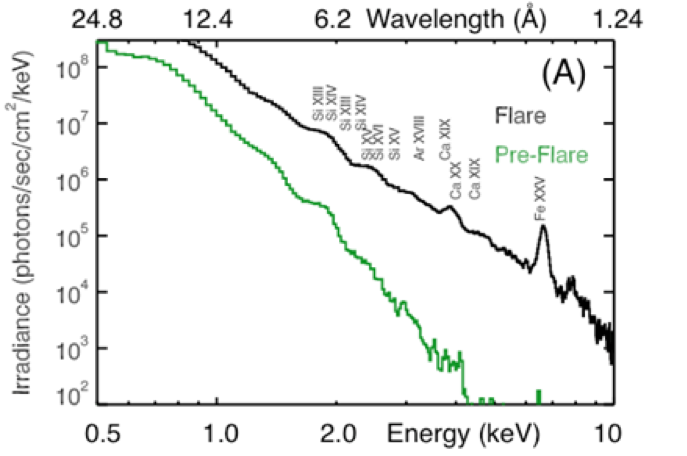

Credit: NASA
