Virtual Reality environments can be hard to fully appreciate when you are only watching on a TV screen. Mixed Reality, where a camera and software remove a green screen background and place the user directly into the environment for all to see, is far more collaborative.

Prior to the STEM Innovation team traveling down to Kennedy Space Center (KSC) last week, the VR development team were lucky enough to receive and quickly test a Mixed Reality setup.

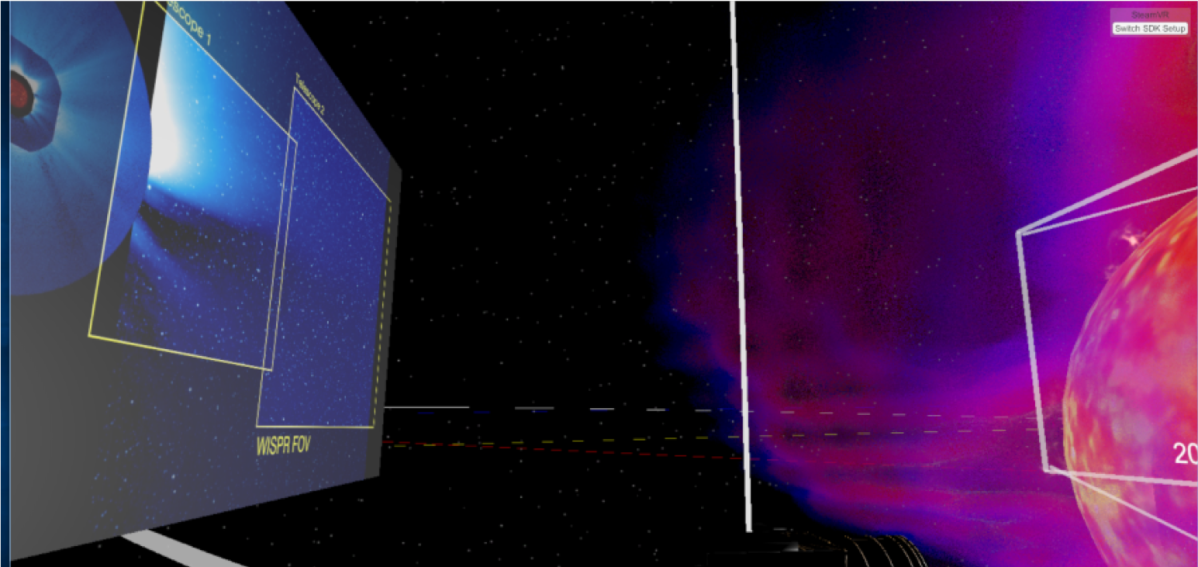

Mixed Reality seems to have slightly different meanings depending on which articles you read. Here we use it to mean a method for letting people see more clearly what a VR user is seeing, by overlaying the person directly inside their VR environment and displaying the result on a TV screen.

Once we got the camera and software (we tested a product called LIV), we went about learning how to set it up for our Lab and our space-science-related products. Our VR summer intern spent a few days trying, and found it challenging to set up the ‘triangulation part’ of the software. This part of the setup effectively gives the software a sense of depth in the VR environment, i.e. it lets the software estimate when a person is in front of or behind a virtual object.
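To illustrate why that depth estimate matters, here is a minimal sketch of per-pixel compositing. It is not how LIV works internally; the function names, the green threshold, and the idea of having a depth value per pixel are all assumptions made for illustration. Green screen pixels are replaced by the virtual scene, and for the remaining pixels the person is drawn only if they are closer to the camera than the virtual object.

```python
# Hypothetical sketch: names, threshold, and depth inputs are assumptions,
# not taken from LIV or any real Mixed Reality product.

def is_green_screen(pixel, threshold=60):
    """Treat a pixel as background if green clearly dominates red and blue."""
    r, g, b = pixel
    return g - max(r, b) > threshold


def composite_pixel(camera_pixel, virtual_pixel, person_depth_m, virtual_depth_m):
    """Pick which pixel to show on the TV for one screen position."""
    if is_green_screen(camera_pixel):
        # Background was keyed out, so the virtual scene shows through.
        return virtual_pixel
    if person_depth_m <= virtual_depth_m:
        # Person is in front of (or level with) the virtual object.
        return camera_pixel
    # Person is behind the virtual object, so the object hides them.
    return virtual_pixel


green = (0, 255, 0)
skin = (200, 150, 120)
rocket = (30, 30, 90)

print(composite_pixel(green, rocket, 1.5, 3.0))  # background replaced
print(composite_pixel(skin, rocket, 1.5, 3.0))   # person in front
print(composite_pixel(skin, rocket, 1.5, 1.0))   # person hidden behind object
```

If the depth estimate is off, the last two cases get swapped, which is why a poorly calibrated setup shows the user floating in front of objects they should be standing behind.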

We did manage to get Mixed Reality working briefly, just prior to shipping our equipment to Kennedy, but we did not have the time to perform robust tests to ensure it would operate smoothly when the team went on the road. This meant the VR team were able to use the Parker launch at KSC as a testbed, and it was a great one for learning what is needed to demonstrate Mixed Reality while on tour at future conventions and conferences.

Have you tried a different system to operate Mixed Reality in your Lab, or on the road? If so, let us know how you got along and what you liked about it.